MIT Technology Review’s What’s Next series looks across industries, trends, and technologies to give you a first look at the future.

Thanks to the boom in artificial intelligence, the world of chips is on the cusp of a huge tidal shift. There is heightened demand for chips that can train AI models faster and ping them from devices like smartphones and satellites, enabling us to use these models without disclosing private data. Governments, tech giants, and startups alike are racing to carve out their slices of the growing semiconductor pie.

Here are four trends to look for in the year ahead that will define what the chips of the future will look like, who will make them, and which new technologies they’ll unlock.

CHIPS Acts around the world

On the outskirts of Phoenix, two of the world’s largest chip manufacturers, TSMC and Intel, are racing to construct campuses in the desert that they hope will become the seats of American chipmaking prowess. One thing the efforts have in common is their funding: in March, President Joe Biden announced $8.5 billion in direct federal funds and $11 billion in loans for Intel’s expansions around the country. Weeks later, another $6.6 billion was announced for TSMC.

The awards are just a portion of the US subsidies pouring into the chips industry via the $280 billion CHIPS and Science Act signed in 2022. The money means that any company with a foot in the semiconductor ecosystem is analyzing how to restructure its supply chains to benefit from the cash. While much of the money aims to boost American chip manufacturing, there’s room for other players to apply, from equipment makers to niche materials startups.

But the US is not the only country trying to onshore some of the chipmaking supply chain. Japan is spending $13 billion on its own equivalent to the CHIPS Act, Europe will be spending more than $47 billion, and earlier this year India announced a $15 billion effort to build local chip plants. The roots of this trend go all the way back to 2014, says Chris Miller, a professor at Tufts University and author of Chip War: The Fight for the World’s Most Critical Technology. That’s when China started offering massive subsidies to its chipmakers.

“This created a dynamic in which other governments concluded they had no choice but to offer incentives or see firms shift manufacturing to China,” he says. That threat, coupled with the surge in AI, has led Western governments to fund alternatives. In the next year, this might have a snowball effect, with even more countries starting their own programs for fear of being left behind.

The money is unlikely to lead to brand-new chip competitors or fundamentally restructure who the biggest chip players are, Miller says. Instead, it will mostly incentivize dominant players like TSMC to establish roots in multiple countries. But funding alone won’t be enough to do that quickly—TSMC’s effort to build plants in Arizona has been mired in missed deadlines and labor disputes, and Intel has similarly failed to meet its promised deadlines. And it’s unclear whether, whenever the plants do come online, their equipment and labor force will be capable of the same level of advanced chipmaking that the companies maintain abroad.

“The supply chain will only shift slowly, over years and decades,” Miller says. “But it is shifting.”

More AI on the edge

Currently, most of our interactions with AI models like ChatGPT are done via the cloud. That means that when you ask ChatGPT to pick out an outfit (or to be your boyfriend), your request pings OpenAI’s servers, prompting the model housed there to process it and draw conclusions (known as “inference”) before a response is sent back to you. Relying on the cloud has some drawbacks: it requires internet access, for one, and it also means some of your data is shared with the model maker.

That’s why there’s been a lot of interest and investment in edge computing for AI, where the process of pinging the AI model happens directly on your device, like a laptop or smartphone. With the industry increasingly working toward a future in which AI models know a lot about us (Sam Altman described his killer AI app to me as one that knows “absolutely everything about my whole life, every email, every conversation I’ve ever had”), there’s a demand for faster “edge” chips that can run models without sharing private data. These chips face different constraints from the ones in data centers: they typically have to be smaller, cheaper, and more energy efficient.

The US Department of Defense is funding a lot of research into fast, private edge computing. In March, its research wing, the Defense Advanced Research Projects Agency (DARPA), announced a partnership with chipmaker EnCharge AI to create an ultra-powerful edge computing chip used for AI inference. EnCharge AI is working to make a chip that enables enhanced privacy but can also operate on very little power. This will make it suitable for military applications like satellites and off-grid surveillance equipment. The company expects to ship the chips in 2025.

AI models are mostly confined to data centers today, and they will always rely on the cloud for some applications. But new investment and interest in improving edge computing could bring faster chips, and therefore more AI, to our everyday devices. If edge chips get small and cheap enough, we’re likely to see even more AI-driven “smart devices” in our homes and workplaces.

“A lot of the challenges that we see in the data center will be overcome,” says EnCharge AI cofounder Naveen Verma. “I expect to see a big focus on the edge. I think it’s going to be critical to getting AI at scale.”

Big Tech enters the chipmaking fray

In industries ranging from fast fashion to lawn care, companies are paying exorbitant amounts in computing costs to create and train AI models for their businesses. Examples include models that employees can use to scan and summarize documents, as well as externally facing technologies like virtual agents that can walk you through how to repair your broken fridge. That means demand for cloud computing to train those models is through the roof.

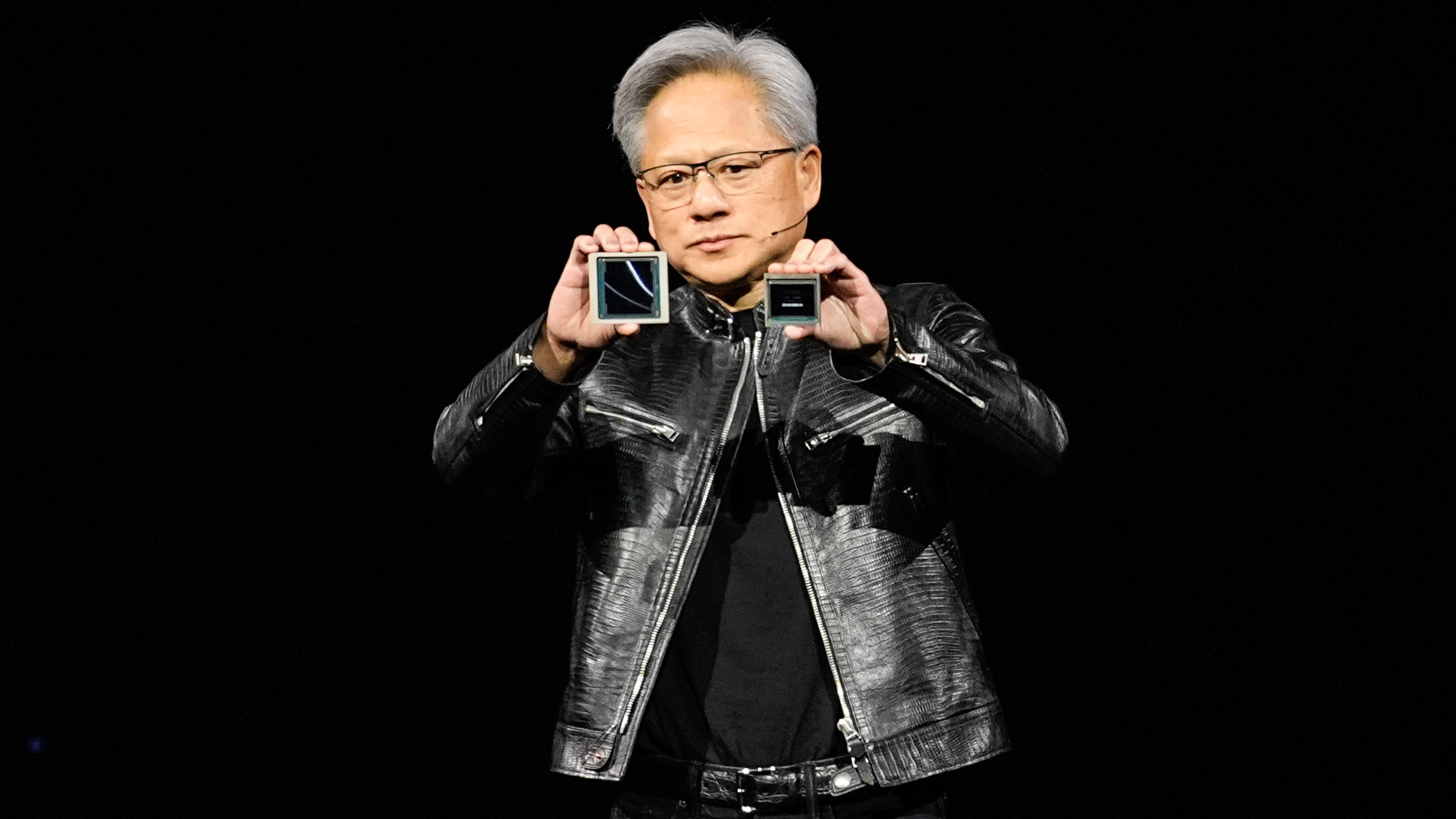

The companies providing the bulk of that computing power are Amazon, Microsoft, and Google. For years these tech giants have dreamed of increasing their profit margins by making chips for their data centers in-house rather than buying from companies like Nvidia, a giant with a near monopoly on the most advanced AI training chips and a value larger than the GDP of 183 countries.

Amazon started its effort in 2015, acquiring startup Annapurna Labs. Google moved next in 2018 with its own chips called TPUs. Microsoft launched its first AI chips in November, and Meta unveiled a new version of its own AI training chips in April.

That trend could tilt the scales away from Nvidia. But Nvidia doesn’t only play the role of rival in the eyes of Big Tech: regardless of their own in-house efforts, cloud giants still need its chips for their data centers. That’s partly because their own chipmaking efforts can’t fulfill all their needs, but it’s also because their customers expect to be able to use top-of-the-line Nvidia chips.

“This is really about giving the customers the choice,” says Rani Borkar, who leads hardware efforts at Microsoft Azure. She says she can’t envision a future in which Microsoft supplies all chips for its cloud services: “We will continue our strong partnerships and deploy chips from all the silicon partners that we work with.”

As cloud computing giants attempt to poach a bit of market share away from chipmakers, Nvidia is also attempting the converse. Last year the company started its own cloud service so customers can bypass Amazon, Google, or Microsoft and get computing time on Nvidia chips directly. As this dramatic struggle over market share unfolds, the coming year will be about whether customers see Big Tech’s chips as akin to Nvidia’s most advanced chips, or more like their little cousins.

Nvidia battles the startups

Despite Nvidia’s dominance, there is a wave of investment flowing toward startups that aim to outcompete it in certain slices of the chip market of the future. Those startups all promise faster AI training, but they have different ideas about which flashy computing technology will get them there, from quantum to photonics to reversible computation.

But Murat Onen, the 28-year-old founder of one such chip startup, Eva, which he spun out of his PhD work at MIT, is blunt about what it’s like to start a chip company right now.

“The king of the hill is Nvidia, and that’s the world that we live in,” he says.

Many of these companies, like SambaNova, Cerebras, and Graphcore, are trying to change the underlying architecture of chips. Imagine an AI accelerator chip as constantly having to shuffle data back and forth between different areas: a piece of information is stored in the memory zone but must move to the processing zone, where a calculation is made, and then be stored back to the memory zone for safekeeping. All that takes time and energy.
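To make that shuffling concrete, here is a minimal Python sketch of a toy accounting model. It is an illustration under stated assumptions, not a description of any real chip: the cost units, the `Tally` class, and the `dot_with_shuffling` function are all hypothetical, and the only point is that the number of trips between memory and processing can outweigh the arithmetic itself.

```python
from dataclasses import dataclass

# Illustrative, made-up cost units (assumptions, not measurements of any chip).
MOVE_COST = 10.0     # assumed cost of moving one value between memory and processing
COMPUTE_COST = 1.0   # assumed cost of one multiply-add


@dataclass
class Tally:
    """Counts data movements and calculations for one toy workload."""
    moves: int = 0
    computes: int = 0

    @property
    def cost(self) -> float:
        return self.moves * MOVE_COST + self.computes * COMPUTE_COST


def dot_with_shuffling(weights, inputs):
    """Dot product in which each operand is fetched from the memory zone and
    the running total is stored back after every step."""
    tally = Tally()
    total = 0.0
    for w, x in zip(weights, inputs):
        tally.moves += 2       # fetch one weight and one input
        total += w * x         # the calculation in the processing zone
        tally.computes += 1
        tally.moves += 1       # store the running total back to memory
    return total, tally


result, tally = dot_with_shuffling([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(result, tally.moves, tally.computes, tally.cost)
```

With these assumed costs, three multiply-adds require nine data movements, so nearly all of the tallied cost comes from moving data rather than computing on it. Real chips use caches and other techniques to reduce such traffic, so the ratio here is only a cartoon of the problem.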

Making that process more efficient would deliver faster and cheaper AI training to customers, but only if the chipmaker has good enough software to allow the AI training company to seamlessly transition to the new chip. If the software transition is too clunky, model makers such as OpenAI, Anthropic, and Mistral are likely to stick with big-name chipmakers. That means companies taking this approach, like SambaNova, are spending a lot of their time not just on chip design but on software design too.

Onen is proposing changes one level deeper. Instead of traditional transistors, which have delivered greater efficiency over decades by getting smaller and smaller, he’s using a new component called a proton-gated transistor that he says Eva designed specifically for the mathematical needs of AI training. It allows devices to store and process data in the same place, saving time and computing energy. The idea of using such a component for AI inference dates back to the 1960s, but researchers could never figure out how to use it for AI training, in part because of a materials roadblock—it requires a material that can, among other qualities, precisely control conductivity at room temperature.

One day in the lab, “through optimizing these numbers, and getting very lucky, we got the material that we wanted,” Onen says. “All of a sudden, the device is not a science fair project.” That raised the possibility of using such a component at scale. After months of working to confirm that the data was correct, he founded Eva, and the work was published in Science.

But in a sector where so many founders have promised—and failed—to topple the dominance of the leading chipmakers, Onen frankly admits that it will be years before he’ll know if the design works as intended and if manufacturers will agree to produce it. Leading a company through that uncertainty, he says, requires flexibility and an appetite for skepticism from others.

“I think sometimes people feel too attached to their ideas, and then kind of feel insecure that if this goes away there won’t be anything next,” he says. “I don’t think I feel that way. I’m still looking for people to challenge us and say this is wrong.”
